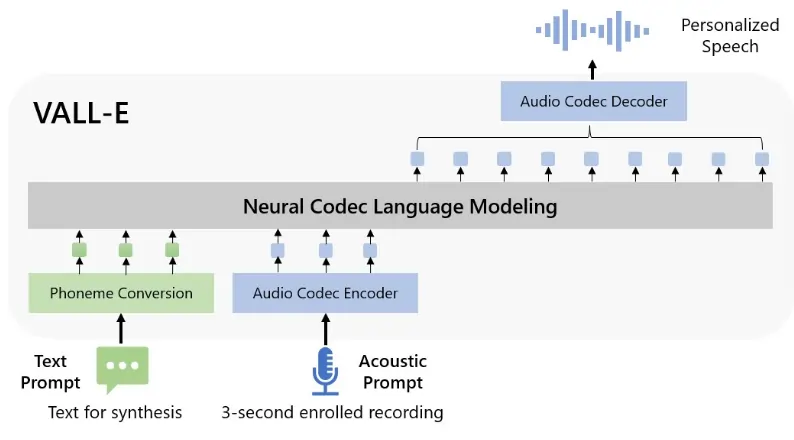

VALL-E is an artificial intelligence (AI) speech synthesizer developed by Microsoft that can closely clone and manipulate a person’s voice. The tool is so advanced that it needs only a three-second voice clip to make that voice say any word, phrase, or sentence.

The algorithm can even mold speech into various emotions and replicate a speaker’s acoustic environment or background noises!

Previously, digital forensics experts considered a lack of background noise a telltale sign of an AI-manipulated voice. But VALL-E’s ability to mimic even this detail adds another layer of difficulty for potential victims and investigators.

VALL-E is technically called a “neural codec language model,” which its developers trained on 60,000 hours (about 6.8 years) of speech from over 7,000 speakers. This massive speech dataset came from Libri-Light, an audio collection assembled by Meta.

Users may also integrate VALL-E with other generative AI models like GPT-3, which could make it even more sophisticated. But Microsoft isn’t in a hurry to show off and dominate the rapidly emerging AI market.

Right off the bat, the software giant acknowledged that bad actors would immediately consider using VALL-E for fraudulent applications. For this critical reason, it has decided to delay the public beta release of the tool.

Furthermore, it is not yet clear if Microsoft is also developing a counter program to VALL-E, which could help the public identify and catch voices manipulated by its own sophisticated tool.

The Threats Of AI Voice Synthesizers

Real-Life Fraud Applications

Even before the public became aware of VALL-E, cybercriminals had been using AI-manipulated voices, or “deepfake” audio clips, to carry out audacious and successful heists.

And with these tools’ proven capabilities, we can only imagine how bad actors would use Microsoft’s tool for their ambitious, bold, and remote attacks.

Take a look at how cybercriminals have been experimenting with these sophisticated AI tools so far.

In March 2019, cybercriminals used an AI-powered speech synthesizer to clone a German chief executive’s voice, then called his counterpart in the United Kingdom and persuaded him to transfer $243,000.

Hackers were successful on the first fund transfer but failed in their second attempt to funnel more money. The case is considered Europe’s first AI-based crime.

Speech cloning tech, which previously existed only in the realms of Mission: Impossible and Detective Conan, has now slipped into the real world, giving everyone a first taste of its possible benefits and dangers.

In 2020, cybercriminals successfully cloned a company director’s voice and used it to call a Hong Kong-based manager and request a transfer of $35 million. Read that amount again.

The “man” on the phone was so believable that the manager didn’t think twice about transferring the multi-million dollar amount.

While these two AI-driven heists (if you could call them that) succeeded, the next one fortunately flopped thanks to an employee’s quick thinking.

In July 2020, Nisos, a cybersecurity firm, investigated a case involving a manipulated audio clip sent as a voicemail to an employee of a tech company. In the voicemail, the “CEO” asked the employee to call back to settle an urgent business deal.

Fortunately, the employee found the message suspicious and immediately sent a report to the company’s legal department.

On the one hand, the outcome of this incident was welcome news, as it saved the tech company from a fraudulent and potentially disastrous deal. On the other hand, it showed that a growing number of cybercriminals are already experimenting with AI-powered tools to conduct heists on vulnerable victims.

Potentially Destructive Applications

We’ve already seen how voice synthesizers that are less sophisticated than VALL-E can cause havoc for several companies. Given the success of these AI-powered heists, we should expect cybercriminals to attempt this type of attack again.

How, and on what scale, nobody knows. But what we can do is anticipate possible areas where these faceless criminals may deploy their next AI-assisted assault.

Compromise Bank Accounts

An increasing number of banks worldwide are now adopting voice biometrics as an additional layer of protection for their customers. Apart from providing their account numbers, customers must also log in by voice to access their accounts.

But as AI speech synthesizers such as VALL-E keep advancing, criminals may soon be able to clone customers’ voices easily and conduct unauthorized fund transfers remotely.

Rig Crime Evidence

A smart tool that can manipulate voices into saying anything could potentially influence and mislead criminal investigations.

This manipulation could protect perpetrators, downplay their involvement in a crime, or worse, implicate an innocent person. Ultimately, it could change the outcome of criminal charges in favor of whoever is operating the AI tool.

Trigger National Instability

Political groups could also use AI speech manipulators to defame specific people and sway public opinion.

We’ve already seen how political entities have used social media to the fullest to gain unethical advantages, but sophisticated voice synthesizers could be an even more potent tool for spreading propaganda.

They may trigger local and even national instability once these tools fall into the wrong hands.

Dupe Family Members

A “grandma scam” is a fraud in which someone uses a family member’s name to extract money from an unsuspecting person. But this classic scam could soon become more sophisticated with AI-manipulated voices.

With the majority of the public still unaware of these advanced voice manipulators, bad actors may take advantage of this situation to net as many victims as they can. After all, would you suspect anything if your “ailing mother” called you and asked for money?

How To Detect AI Voices?

AI-manipulated voices could become one of our society’s biggest threats. Fortunately, there are ways to detect these fraudulent voices, from advanced tools to simple preventive measures that anyone can take.

Use Advanced Tools Like Spectrum3D

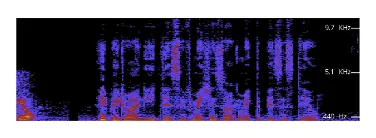

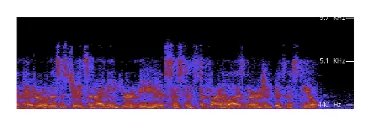

In the Nisos case, the firm dissected the voicemail message using a tool called Spectrum3D. With this software, it discovered key abnormalities in the fraudulent message that even trained ears might miss.

Its analysis found a choppy voice, a lack of natural background noise, inconsistencies in pitch and tone, and a high probability that the perpetrators used a text-to-speech (TTS) system.

Overall, the entire audio message was low-quality, leading the investigators to suspect that the cybercriminals were just experimenting with this fraud and hoping that someone would eventually take the bait.
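To make the “lack of natural background noise” clue more concrete, here is a minimal Python sketch of one way to estimate how quiet the pauses in a recording are. It is not Nisos’s method and has nothing to do with how Spectrum3D works internally; the function names, the 400-sample frame size, and the -70 dB threshold are all assumptions chosen for illustration. Keep in mind that a tool like VALL-E, which can imitate a speaker’s acoustic environment, could slip past a check this simple.

```python
import math
import random


def frame_levels_db(samples, frame_len=400):
    """RMS level (dB relative to full scale) of each non-overlapping frame."""
    levels = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(x * x for x in frame) / frame_len)
        levels.append(20 * math.log10(max(rms, 1e-10)))  # clamp avoids log(0)
    return levels


def noise_floor_db(samples, frame_len=400, quietest_fraction=0.1):
    """Average level of the quietest frames, i.e. the pauses between words."""
    levels = sorted(frame_levels_db(samples, frame_len))
    if not levels:
        raise ValueError("recording is shorter than one frame")
    count = max(1, int(len(levels) * quietest_fraction))
    return sum(levels[:count]) / count


def lacks_background_noise(samples, threshold_db=-70.0):
    """True when the pauses are quieter than the (assumed) threshold."""
    return noise_floor_db(samples) < threshold_db


def demo_signal(noise_std, seed=0, rate=16000):
    """Hypothetical test clip: one second of tone bursts with pauses,
    plus optional Gaussian 'room noise' of the given standard deviation."""
    rng = random.Random(seed)
    out = []
    for i in range(rate):
        speaking = (i // 4000) % 2 == 0
        tone = 0.5 * math.sin(2 * math.pi * 220 * i / rate) if speaking else 0.0
        out.append(tone + rng.gauss(0.0, noise_std))
    return out


if __name__ == "__main__":
    print(lacks_background_noise(demo_signal(0.0)))   # digitally silent pauses
    print(lacks_background_noise(demo_signal(0.01)))  # pauses with room noise
```

A real recording would first need to be decoded into samples scaled between -1.0 and 1.0, and a forensic analyst would look at the full spectrum rather than a single loudness figure.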

Prepare “Challenge Questions”

Companies of any size, and individuals too, should prepare challenge questions to ask whenever they suspect that an AI voice is on the line. These are sets of questions that only one person or a carefully selected group of people can answer.
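For a company that wants to keep such answers on file, one possible approach is to store only a salted hash of each answer, so that a leaked file does not hand the answers to attackers. The Python sketch below illustrates that idea using the standard library; the function names, the normalization rule, and the iteration count are assumptions for illustration, not an established procedure.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 100_000  # assumed work factor; tune it for your own hardware


def _normalize(answer: str) -> bytes:
    """Lowercase and collapse whitespace so small typing differences don't matter."""
    return " ".join(answer.lower().split()).encode("utf-8")


def enroll_answer(answer: str) -> tuple[bytes, bytes]:
    """Return (salt, digest) to store instead of the plain-text answer."""
    salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", _normalize(answer), salt, ITERATIONS)
    return salt, digest


def verify_answer(candidate: str, salt: bytes, digest: bytes) -> bool:
    """Check a caller's answer against the stored digest in constant time."""
    attempt = hashlib.pbkdf2_hmac("sha256", _normalize(candidate), salt, ITERATIONS)
    return hmac.compare_digest(attempt, digest)


if __name__ == "__main__":
    salt, digest = enroll_answer("Blue Heron")  # hypothetical answer
    print(verify_answer("blue heron", salt, digest))
    print(verify_answer("grey heron", salt, digest))
```

The questions themselves still matter most: an answer that anyone can find on social media offers little protection, however it is stored.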

Voice Liveness Detection

Voice liveness detection (VLD) is a test to see if the person on the other end of the line is live and not just an audio message manipulated by artificial intelligence. You can ask the caller to say random words or phrases to check that you are talking to a real human.

However, note that these methods can only reduce the chances of being duped by AI voices; in other words, they are not absolute solutions against these smart algorithms.

But this shouldn’t discourage you. As citizens, we should use every defense available against these high-tech frauds to protect ourselves and our society from the faceless criminals hiding in the cyber realm.
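The “say random words” idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the word list and function names are hypothetical, and the sketch assumes you already have a text transcript of what the caller said, whether typed by a human listener or produced by some speech-to-text step that is not shown here. It mainly rules out prerecorded clips, since a recording cannot know the phrase in advance.

```python
import secrets
import string

# Hypothetical word list; a real one should be much longer.
WORDS = ["amber", "bridge", "candle", "harbor", "lantern",
         "meadow", "pepper", "saddle", "timber", "violet"]


def make_challenge(num_words: int = 4) -> str:
    """Pick an unpredictable phrase for the caller to repeat."""
    return " ".join(secrets.choice(WORDS) for _ in range(num_words))


def _normalize(text: str) -> list[str]:
    """Lowercase, strip punctuation, and split into words."""
    cleaned = text.lower().translate(str.maketrans("", "", string.punctuation))
    return cleaned.split()


def response_matches(challenge: str, transcript: str) -> bool:
    """True when the caller repeated exactly the requested words, in order."""
    return _normalize(challenge) == _normalize(transcript)


if __name__ == "__main__":
    phrase = make_challenge()
    print("Please repeat:", phrase)
    print(response_matches(phrase, phrase.upper() + "."))
```

Using `secrets` rather than `random` keeps the phrase hard to predict, which is the whole point of the challenge.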
