The hype around the newly released GPT-4 is insane, and no one can help but talk about, and be awed by, this new large language model (LLM) from OpenAI.

And I’m with you guys!

But one major mistake that almost everyone (especially the so-called influencers) seems to be making is pitting ChatGPT against GPT-4.

First off, let’s clarify this one.

Rather than comparing ChatGPT with GPT-4, people should be comparing GPT-3.5 and GPT-4, the two language models that now run on ChatGPT Plus. ChatGPT itself is merely (no offense) a chatbot that houses and runs these two AI models.

And in case you’ve seen an article or even an influencer harping on the ‘ChatGPT vs. GPT-4’ battle cry, it’s a dead giveaway that they’re clueless about what they’re talking about. Leave ’em.

Now that this misconception is out of the way, what should we expect from GPT-4, then?

GPT-4 could potentially dwarf GPT-3.5 in terms of applications as its visual capabilities can possibly open the floodgates for new services and platforms.

In fact, this particular feature alone could spread to different industries, including the disability service sector, healthcare, education, communication, and more.

Moreover, its ability for deeper reasoning would certainly have a lot of valuable applications that startups, businesses, and individuals would soon discover.

Unfortunately, as much as we want to peel back the technical improvements of this new language model, OpenAI has been ironically ‘closed’ and secretive about this info.

Here’s an excerpt from its GPT-4 paper:

“This report contains no further details about the architecture (including model size), hardware, training compute, dataset construction, training method, or similar.”

The reason for this, according to OpenAI, is the safety implications of its new LLM and the growing competition among AI platforms across the industry.

But what’s beyond doubt right now is that it is a ‘multimodal’ model, which means it will soon be able to accept text and image inputs together and analyze them with a close-to-human level of judgment.

As for GPT-4’s parameter count, OpenAI hasn’t disclosed it publicly. But it hinted at a larger model by mentioning that the new LLM now has ‘broader general knowledge’ and ‘leverages more data and more computation.’

As an additional layer of safety, OpenAI trained GPT-4 with a process called Reinforcement Learning from Human Feedback (RLHF), which uses human feedback to course-correct the AI model.

This specific training process was crucial in turning the base GPT-4 model (with potential risks) into a safe model that the public could use.

Now that the basics have been taken care of, it’s time to take a deep dive into GPT-4’s mind-blowing improvements over its predecessors.

Indeed, calling these new capabilities ‘major upgrades’ is definitely an understatement. Alright, that’s a bit over the top, and before I utter another exaggeration here, let’s get into it!

1) Image Analysis

Out of all the new features of GPT-4, the upcoming image analyzer is probably its most sought-after capability. So let’s put this at the very top.

While you may have already seen some people posting about the new language model’s image capabilities, those are actually improvised workarounds.

“GPT-4 is not just a language model; it’s also a vision model.”

Greg Brockman, OpenAI President and Co-founder

OpenAI clarified that GPT-4’s image processing is “not yet publicly available.”

Moreover, even though it will soon be capable of accepting a combination of text and image inputs, it will still remain a text-only generator.

But while this much-awaited feature is still in the preview phase, the company gave ChatGPT fans a sneak peek, both in its demo and paper, of how it would actually work.

Let’s start with the demo.

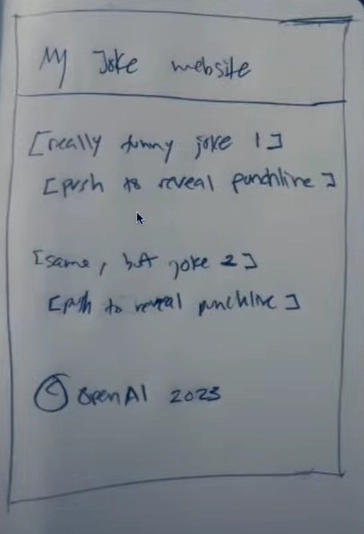

In the now-viral part of the demonstration, OpenAI President and Co-Founder Greg Brockman took a photo of a messy, handwritten sketch of an idea for a website.

He then uploaded this image and gave GPT-4 this short and straightforward command:

“Write brief HTML/JS to turn this mock-up into a colorful website, where the jokes are replaced by two real jokes.”

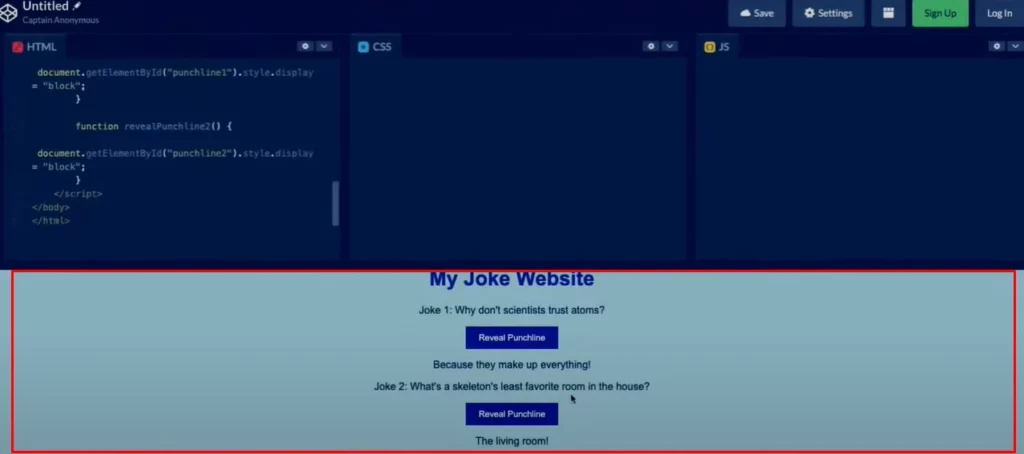

And voila! GPT-4 seamlessly created a working website out of not-so-neat handwriting. Neat!

Now, let’s take a look at some more examples.

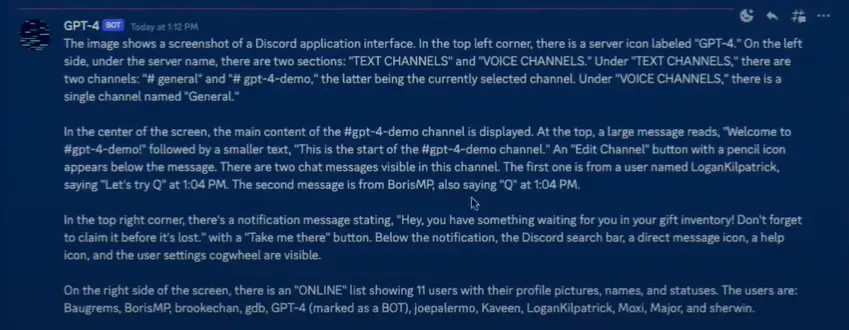

This time, Brockman tested GPT-4’s ability to analyze the contents of an image.

For this quick experiment, he uploaded a screenshot of GPT-4’s Discord account and let the language model analyze it. And it didn’t disappoint.

Given the prompt “Can you describe this image in painstaking detail?”, GPT-4 produced this impressive output.

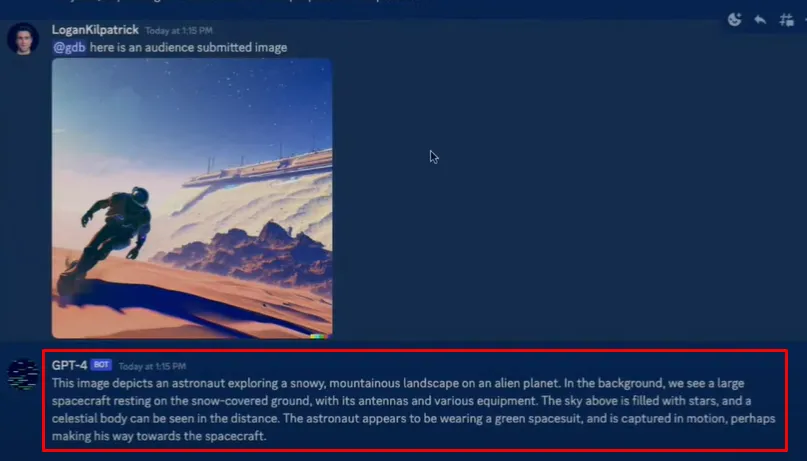

Here’s another example of its detailed description:

Just imagine the hundreds, if not thousands, of applications that could be unleashed once this feature comes out.

But there’s another aspect of its image feature that is equally impressive, which is the ability to understand the context of a given picture.

We’re now talking about not just content but context, a complicated aspect that even tech assistants have struggled to understand for years.

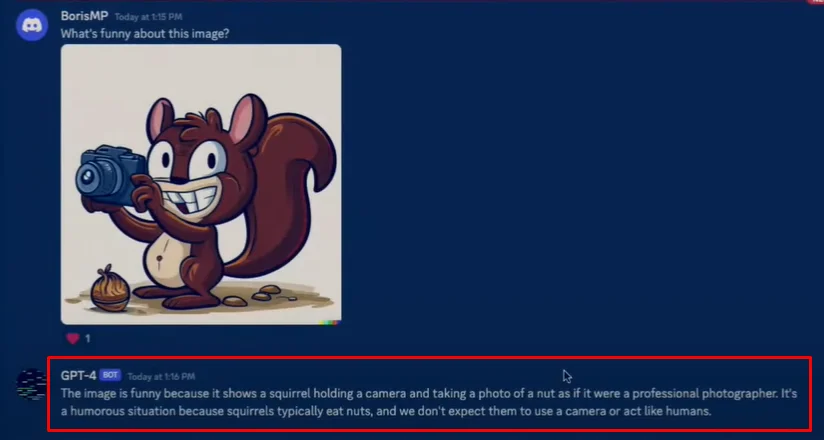

In the live experiment, GPT-4 was fed an image and asked what was funny about it. And (un)surprisingly, it gave a quick and accurate answer.

Now, let’s take a look at OpenAI’s image experiments in its GPT-4 paper. Just to be transparent, I’m quite hesitant to show these results as they were not done in a live demo.

But since they came straight from the company’s technical report, let’s hope that these outputs can be reproduced just as seamlessly in a real-world setting.

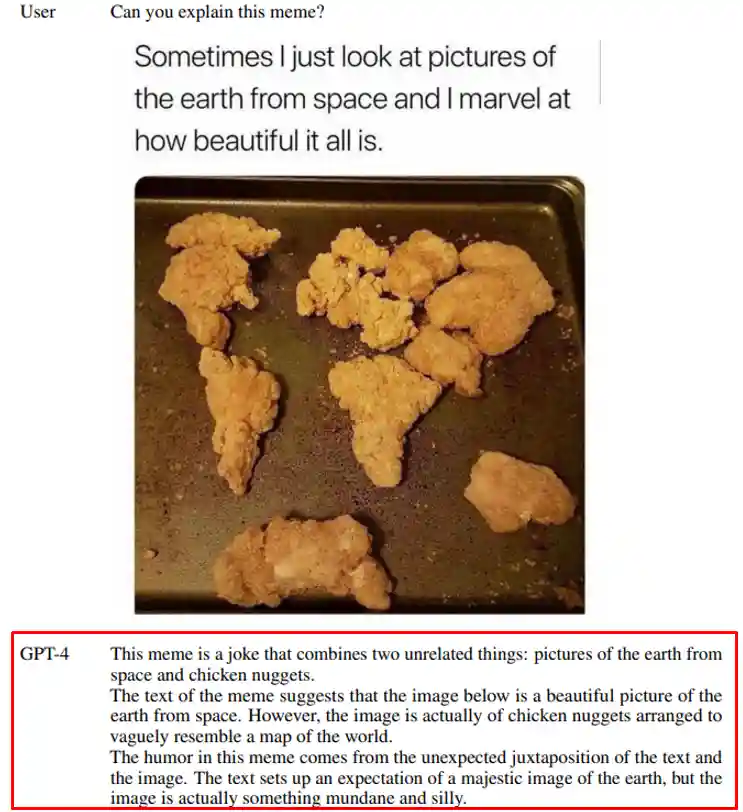

The following images were fed to GPT-4, and it was asked to identify their unusual and funny aspects.

The language model’s responses are the ones inside the red box.

For this one, GPT-4 accurately answered what’s odd in this picture. Kudos to the man for this heroic act.

For this one, it not only identified what’s unusual in the image but also the context of the meme.

Indeed, while GPT-3 and 3.5 are just steps away (in terms of numbering) from GPT-4, the capabilities you’ve seen above are definitely miles away from the two previous models.

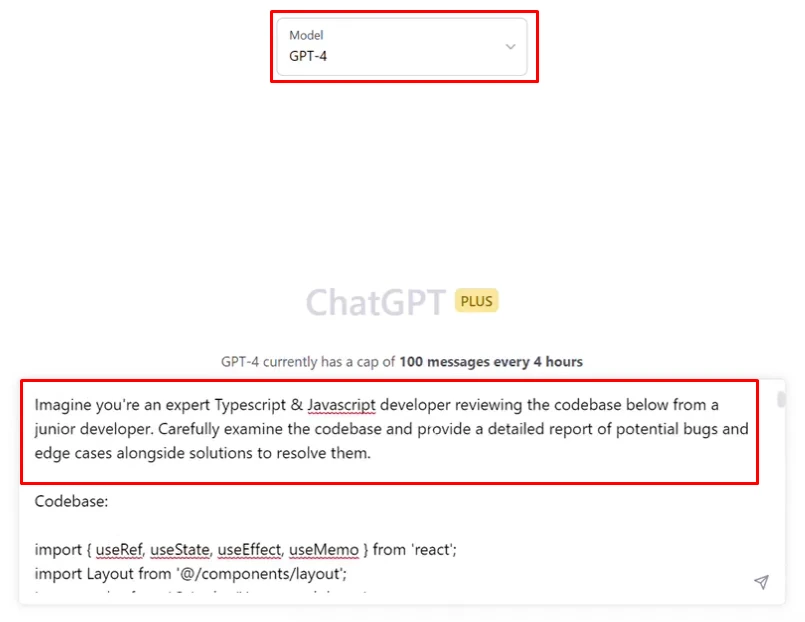

2) A More Advanced Code Generator

I literally had to stop writing for a minute and digest the unbelievably massive potential of this capability: Soon, almost everyone can build websites, mobile applications, and chatbots even without learning how to code.

Now, GPT-4 not only generates more sophisticated code from text prompts but also teaches users how to debug and test it on platforms like Replit.

As a result of these enhanced programming abilities, the LLM could potentially create a new revenue stream for a notable fraction of the world’s population. Yes, AI may make a long list of jobs obsolete soon, but it can also create new ones as more advanced abilities like these get rolled out.

Of course, learning the concepts of code-building is still crucial for creating a secure and well-functioning digital platform. Moreover, there are also other nitty-gritty aspects that creators must deal with, which, sometimes, are outside an AI’s control.

But the automation of code creation lifts a heavy burden from people who have brilliant ideas but are intimidated by the skills required to build such platforms.

Now, the learning curve for coding has been significantly reduced, which lays the ground for the next generation of creators.

For the next part, we’ll talk about the AI’s ability for in-depth summarization.

OpenAI has integrated more creativity and deeper analysis in GPT-4, allowing it to summarize short to dense content in a more interesting and valuable format.

3) Infusing Creativity

We’ve all been spoiled by ChatGPT’s quick and effortless summarization capabilities. Hands down, it is truly helpful and innovative, but it has a key limitation that was shown in the demo: Creativity.

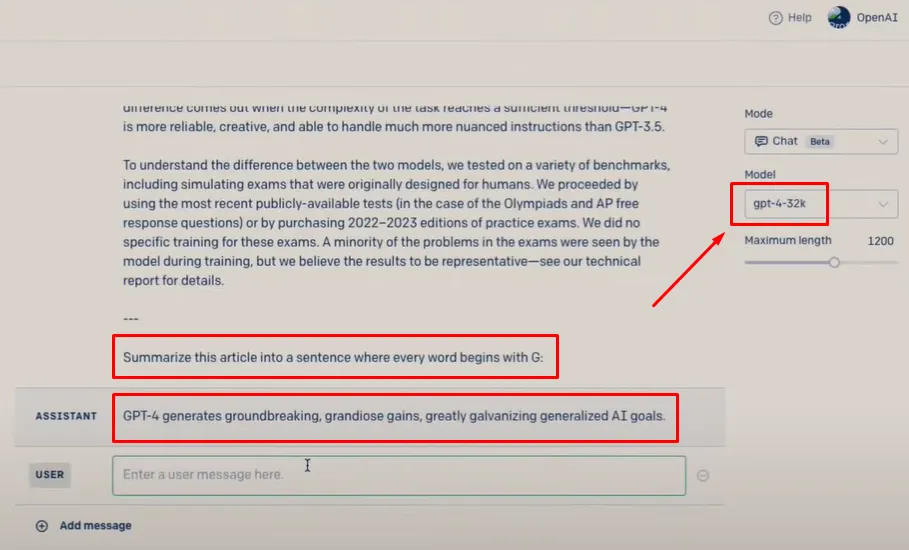

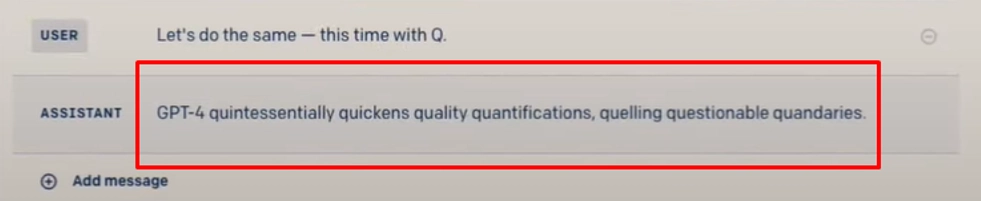

In the demonstration, Brockman asked the older model (GPT-3.5) to summarize a GPT-4 blog post, but with a twist!

He asked the model to summarize the article into a sentence where every word begins with ‘G.’

And guess what? GPT-3.5 quickly gave up!

But when he switched to GPT-4 and gave it the same prompt, the new model quickly rose to the challenge and generated an answer.

In fact, it even accommodated a request from one of the viewers and created a summary with each word starting with ‘Q’, which is much harder than ‘G’.
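To make the constraint concrete, here is a minimal Python sketch of a checker for the ‘every word starts with the same letter’ rule. The function name `all_words_start_with` and the sample sentences are my own illustrations and do not come from the demo.

```python
import string


def all_words_start_with(sentence: str, letter: str) -> bool:
    """Return True if every word in `sentence` begins with `letter` (case-insensitive)."""
    # Strip surrounding punctuation so "gains." or "(great)" are judged by their first character.
    words = [w.strip(string.punctuation) for w in sentence.split()]
    words = [w for w in words if w]  # drop tokens that were pure punctuation
    # An empty sentence does not satisfy the challenge.
    return bool(words) and all(w[0].lower() == letter.lower() for w in words)


# Hypothetical sentences, made up for illustration (not the demo's actual output).
print(all_words_start_with("Great generative giants generate gains.", "g"))
print(all_words_start_with("GPT-4 generates great text.", "g"))
```

The second sentence fails because of the single word ‘text’, which shows how unforgiving the rule is: one stray word breaks the whole summary.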

Okay. Some might even ask (and complain): “Who would even need that ridiculous way of summarizing?”

Well, what’s being demonstrated here isn’t GPT-4’s ability to create a summary in which every word starts with the same letter.

It’s the ability of GPT-4 to execute a deeper level of creativity. With this new version, GPT is not just a cold-blooded machine anymore that merely automates a user’s tasks. It has now evolved into an assistant that can also add more flair to its generations.

The ‘same-first-letter-in-each-word’ challenge is just a sneak peek of GPT-4’s creativity, which users could definitely unleash in the coming weeks. Or even days after you read this one.

4) A More Thorough Comparison of Data

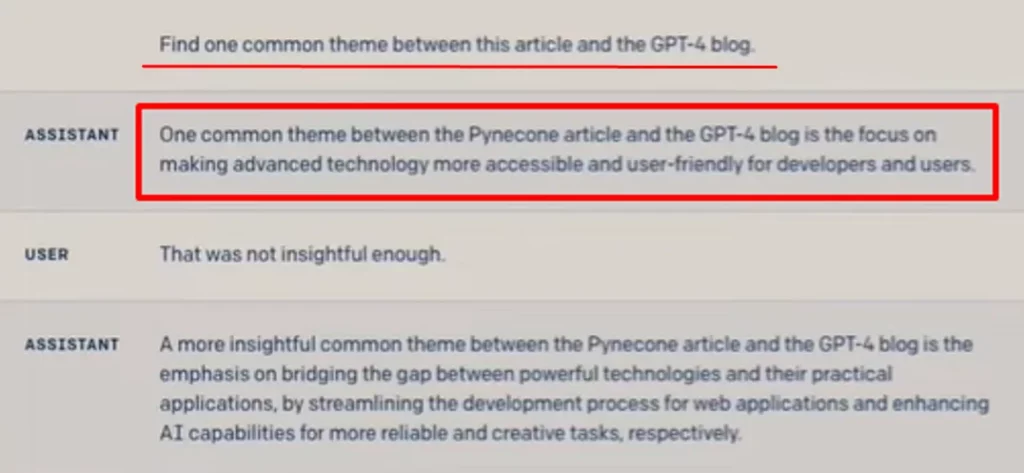

After having some fun with the challenge, Brockman then proceeded to the next experiment: The comparison of two different articles.

For this one, he sought GPT-4’s help analyzing two articles and asked about their common theme. The first article is about GPT-4, and the second one revolves around Pynecone, a full-stack Python framework.

As you may have expected, GPT-4 didn’t find the task so hard and immediately generated a response. And in case you’re not satisfied with the results, you can always ask it to go deeper and make its output more insightful.

Of course, this task can also be done with GPT-3.5.

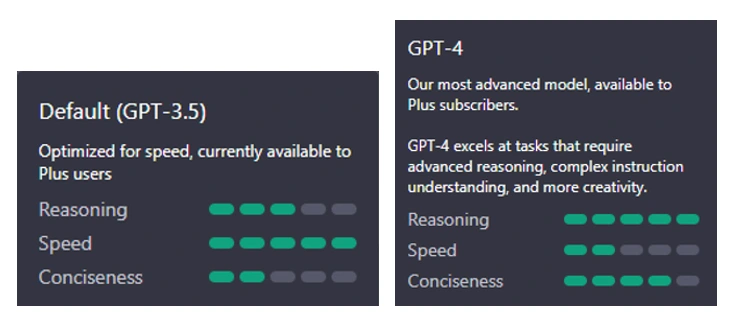

But one major (yet subtle) difference you get from the new model is its higher level of reasoning and conciseness. It is slower, but in my honest opinion, that’s a small price to pay for the advanced features I can get.

5) Safer and More Secure

According to OpenAI, it spent six months calibrating GPT-4 to make it safer and more secure than GPT-3.5, the poor language model that has been jailbroken countless times.

The stats are pretty good and, hopefully, will hold up in a real-world setting.

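The company says that compared to GPT-3.5, GPT-4 is now 82% less likely to respond to requests for disallowed content, and that it handles sensitive requests (like medical advice and self-harm) in line with OpenAI’s policies 29% more often.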

Moreover, it is 40% more likely to produce factual responses, which means it should hallucinate less often.

But we can expect these stats to get better as OpenAI intends to improve GPT-4 over time (in phases), just as it did with ChatGPT.
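Keep in mind that percentages like these are relative to GPT-3.5, not absolute rates. Here is a small Python sketch of the arithmetic, assuming both figures are relative changes. The baseline rates are hypothetical numbers I picked purely for illustration; OpenAI did not publish them in this form.

```python
def apply_relative_change(baseline_rate: float, relative_change: float) -> float:
    """Scale a baseline rate by a relative change (-0.82 means '82% less likely')."""
    return baseline_rate * (1 + relative_change)


# Hypothetical baselines, chosen only to illustrate the arithmetic.
baseline_unsafe = 0.10   # assume GPT-3.5 complied with 10% of requests it should refuse
baseline_factual = 0.50  # assume GPT-3.5 scored 50% on some factuality evaluation

gpt4_unsafe = apply_relative_change(baseline_unsafe, -0.82)
gpt4_factual = apply_relative_change(baseline_factual, 0.40)

print(f"unsafe responses:  {baseline_unsafe:.1%} -> {gpt4_unsafe:.1%}")
print(f"factual responses: {baseline_factual:.1%} -> {gpt4_factual:.1%}")
```

Under these made-up baselines, ‘82% less likely’ would still leave a small share of unsafe responses, which is why the relative figures alone don’t tell you how often the model actually misbehaves.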

6) Sharper In Acing Exams

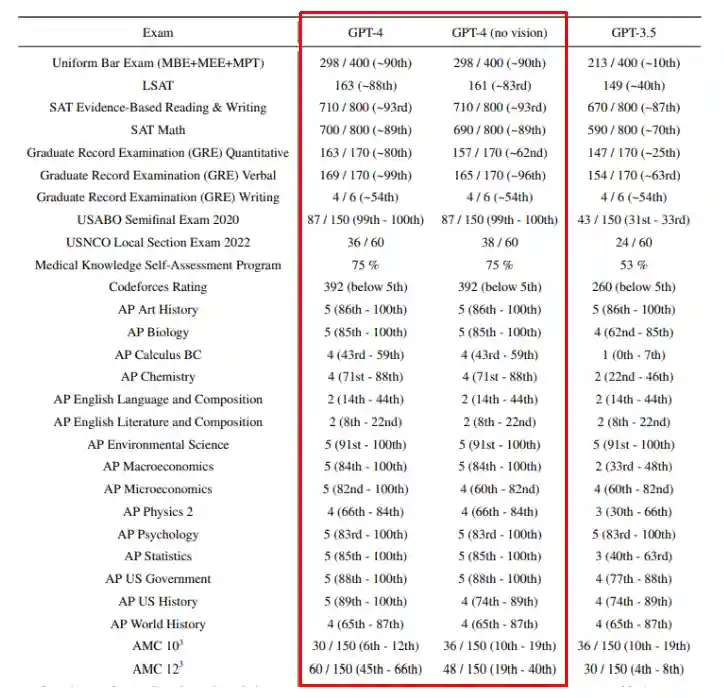

In OpenAI’s simulated bar exam, GPT-4 scored around the top 10% of test takers. To see how large this improvement is, consider that GPT-3.5 scored around the bottom 10%.

The difference between their performance is clearly wide.

The differences between GPT-3.5 and GPT-4 are almost unnoticeable at first glance, but once both of them start handling bigger and more complex data, OpenAI’s latest model clearly stands out, revealing its vast improvements over its predecessors.

For the next experiment, OpenAI tested GPT-4’s performance on exams in different languages.

For this one, it used MMLU (Massive Multitask Language Understanding), a more complex benchmark for testing a language model’s performance.

MMLU contains 14,000 multiple-choice problems that cover 57 subjects. OpenAI translated these 14K questions into various languages with the help of Azure Translate.

The results revealed that in 24 of the 26 languages tested, GPT-4 outperformed the English-language performance of GPT-3.5, Google’s PaLM, and DeepMind’s Chinchilla!
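Under the hood, a multiple-choice benchmark like this boils down to comparing the model’s chosen option with the answer key and averaging per language. The sketch below shows only that scoring step in Python. The `Item` structure and the toy records are invented for illustration; they are not real MMLU data or results.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Item:
    language: str   # language the question was translated into
    correct: str    # answer key: one of "A", "B", "C", "D"
    predicted: str  # the option the model picked


def accuracy_by_language(items: list[Item]) -> dict[str, float]:
    """Return the fraction of correctly answered questions for each language."""
    right: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for item in items:
        total[item.language] += 1
        if item.predicted == item.correct:
            right[item.language] += 1
    return {lang: right[lang] / total[lang] for lang in total}


# Toy records, made up for illustration only.
toy_items = [
    Item("English", "A", "A"),
    Item("English", "C", "B"),
    Item("Italian", "D", "D"),
    Item("Italian", "B", "B"),
]
print(accuracy_by_language(toy_items))
```

Comparing one model’s per-language accuracy against another model’s English accuracy is then a simple lookup in the resulting dictionaries.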

7) Customized Tones

The GPT-4 API will soon allow users to customize the tone, style, and verbosity of their outputs to fit the needs of their target recipients.

Note: The new API is yet to be released, but you can sign up on its waitlist and access it as soon as it rolls out.

Going back to GPT-4’s message customizability, why does it matter in the first place?

For context, ChatGPT only provides a fixed tone, style, and length of messages (it is actually capable of variety, but you have to go through some cumbersome and rigid steps, as it was not designed for this ability).

This may not be a problem since it can quickly generate outputs, which is ideal for many (if not all) situations.

But the ‘uniform personality’ of its messages may prove to be less desirable when used in the real world.

Why? Because adopting the right tone and style is crucial for business owners and service providers, as these adaptations can help them compete across various demographics and secure their position with their target audience.
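In chat-style APIs such as OpenAI’s, this kind of steering is typically done with a ‘system’ message placed ahead of the user’s prompt. The Python sketch below only builds such a message list and does not call any API. The helper `build_messages` and the wording of the instruction are my own assumptions about how one might phrase it, not an official recipe.

```python
def build_messages(user_prompt: str, tone: str, style: str, max_sentences: int) -> list[dict[str, str]]:
    """Build a chat-style message list whose system message sets tone, style, and length."""
    system_instruction = (
        f"Respond in a {tone} tone, using a {style} style. "
        f"Keep the answer to at most {max_sentences} sentences."
    )
    return [
        {"role": "system", "content": system_instruction},  # steers how the model answers
        {"role": "user", "content": user_prompt},           # the actual request
    ]


# Hypothetical example: a shop owner who wants short, friendly replies.
messages = build_messages(
    user_prompt="Explain our refund policy to a first-time customer.",
    tone="friendly",
    style="plain-language",
    max_sentences=3,
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Swapping the `tone` and `style` arguments is all it would take to address a different audience with the same underlying request.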

8) Helps You Understand Complex Topics

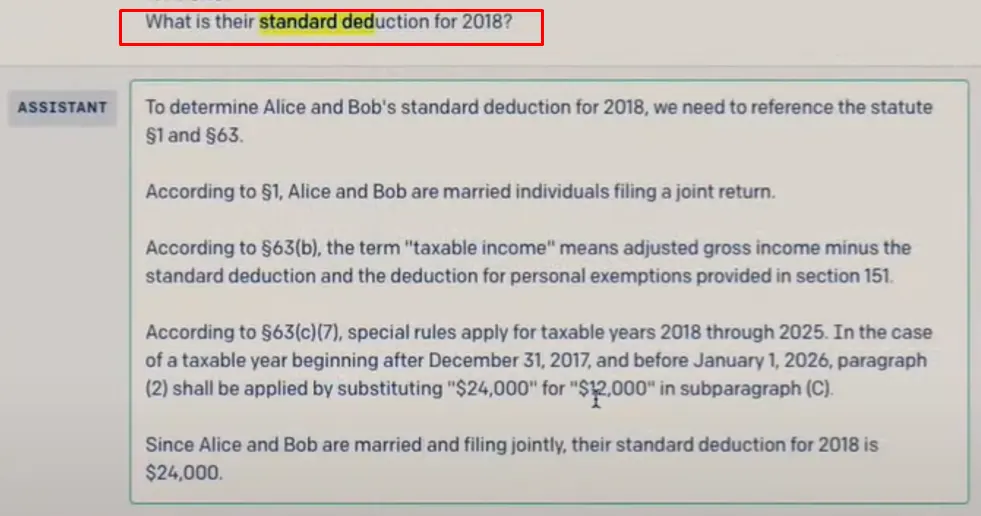

It’s rare to find someone who will find extreme delight in reading and understanding a 16-page tax code. But in case we need to deal with dense and complex content like this, GPT-4 can help us understand it better.

In the demo, the OpenAI co-founder fed the language model with around 16 pages of tax code and gave specific instructions.

If you already reached this point in the article, it comes as no surprise anymore that GPT-4 can seamlessly generate a decent answer for this multifaceted task.

While this is tempting to do, Brockman reminded everyone that GPT-4 is not a tax professional, and a human tax advisor is always necessary when dealing with taxes.

But one question about this demo really bugs me: was the answer actually right?

Do you still remember Microsoft’s ‘New Bing’ demo, which seemed smooth and accurate but turned out to be filled with inaccurate computations? (It has been using GPT-4 all along!)

With that surprisingly error-filled demonstration, I have some reservations about this tax demo, as it can possibly have some major flaws that tax experts might call out soon.
