Anand Mahindra, the billionaire Chairman of Mahindra Group, is an active social media user. He shares a range of content, from motivational and funny posts to tech updates, with over 10 million followers. But one Saturday, he sparked alarm by sharing an AI-generated deepfake clip.

Deepfake videos use AI to generate a digital face that closely resembles a person of interest. Although video clips are the most common form, users can also apply deepfake technology to photos and sound recordings.

One example is Jukebox from OpenAI, the company behind ChatGPT, which generates music that sounds like its target artists but in different styles. This technology can open many doors to creativity, but it can also be misused once it falls into the wrong hands.

The same applies to the deepfake video Mahindra posted, especially since it circulates freely on the Internet without any restrictions. That’s why Mahindra’s post served as a warning, raising concerns about how society can combat deceptive content.

The Viral Deepfake Video

Mahindra wasn’t the only one voicing concern about the deepfake video; his followers also agreed on the risks it could bring, specifically in the media. If you’re curious, here’s the clip circulating on the Internet:

Beebom, a company focusing on tech news and reviews, originally posted the video on its Instagram account. In the post, its content strategist, Akshay Singh Gangwar, explains the AI concept behind it.

You’ll notice that the AI follows everything he does, from hand movements to facial expressions. He even used familiar faces, including that of Robert Downey Jr., who played Tony Stark (Iron Man) in the MCU.

But if you look closely, slight discolorations and poorly fitted parts of the faces let viewers quickly tell that it’s an AI-generated video. Gangwar addresses this, stating that the imperfections were due to the limited number of iterations used to train the tech. However, he notes that with additional training, the technology could improve to the point where it would be almost impossible to tell that a video is a deepfake.

Gangwar’s content is an eye-opener about how far AI tech can go. At the same time, he reminded everyone to use and handle deepfake videos with caution.

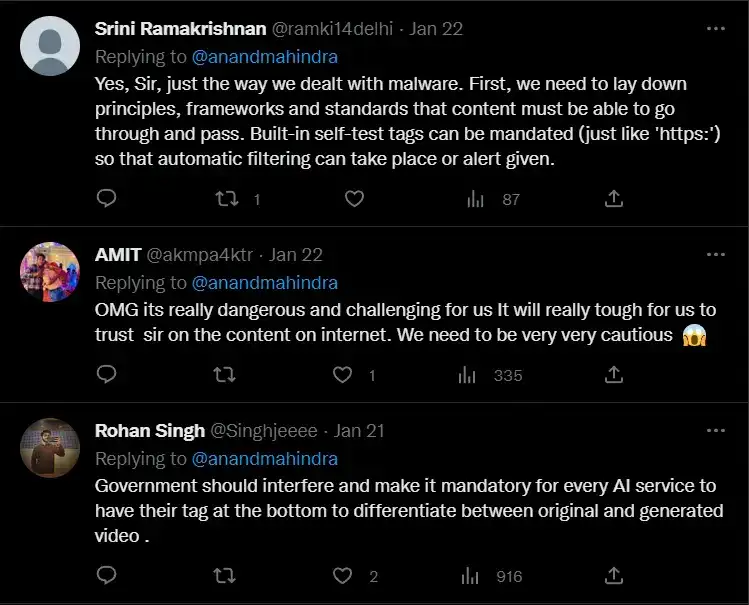

AI-powered fake media has existed for several years, but Mahindra’s large influence can help raise awareness of how AI can be used to spread misinformation. Some of his followers were even eager to share their insights on what measures to take.

World’s Response to Deepfakes

Users can undoubtedly abuse deepfake technology in different ways, one of which is Mahindra’s main concern: deceptive content. While this poses a significant threat to the Internet’s credibility, the world has been slow to respond, with only a few countries implementing rules.

Ramesha Doddapura, a political journalist, also shared an image from an article in The Hindu. His comment states that India currently has no laws to combat deepfakes.

But Rajiv Kumar, the Chief Election Commissioner of India, recently initiated talks with other countries concerning the spread of synthetic media and the use of AI-powered tech to detect it.

Furthermore, China took a step toward regulating deepfake tech with rules that took effect on January 10, 2023. Key provisions include a consent requirement and strict prohibitions on practices such as disseminating fake news and publishing synthetic content without labels.

The EU also has the Strengthened Code of Practice on Disinformation. It tackles penalties, rewards, and other solutions, as it’s a measure to safeguard users against the misuse of tech.

The proliferation of fake videos has revealed the urgent need for effective regulations and solutions to ensure the safe and responsible use of deepfake technology. While some countries have taken initial steps to address the issue, it’s clear that there’s still more work to do.

As deepfake technology continues to evolve, laws and regulations must be updated to keep pace with these developments. This way, the integrity of information and trust in the digital world can be preserved and protected.
